Teaming with Microsoft and the Massachusetts Institute of Technology, the Metropolitan Museum of Art offered a glimpse of the future Monday night (4 February), presenting five digital prototypes that harness artificial intelligence to make use of images of objects in the Met’s collection.

Under dimmed lights with electronic music throbbing in the background, guests in the museum’s Great Hall circulated among strategically placed screens. Visitors could sample applications like Storyteller, which uses voice recognition AI to conjure Met images illustrating whatever words a user utters aloud. (The prototype understands five languages so far.) Also attracting attention was My Life, My Met, which uses AI to analyze a user’s Instagram posts and replaces the images with the closest-matching works in the Met’s collection. A National Geographic photograph of an ocean, for example, summoned Winslow Homer’s Channel Bass from 1904.

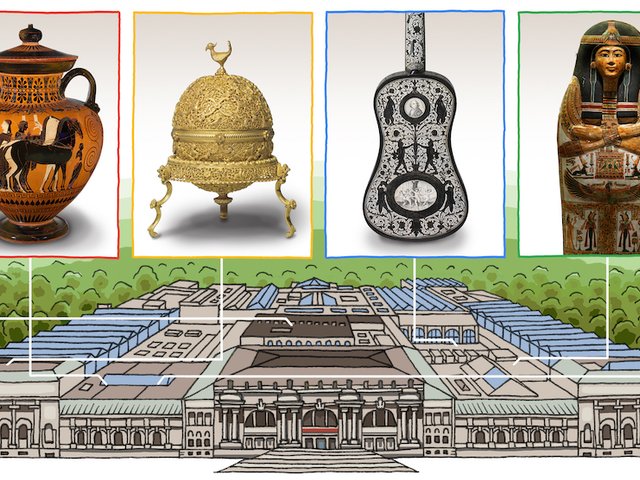

The occasion was the second anniversary of the Met’s Open Access program, which makes over 406,000 high-resolution images of public-domain works in the collection available under Creative Commons Zero. All of the images can be shared and remixed without restrictions. The museum casts the initiative as a way of engaging digitally with people around the globe, and the collaboration with Microsoft and MIT promises to deepen such connections.

“This is a conservative institution by nature,” Max Hollein, the Met’s director, said in brief remarks to the crowd at the outset of the evening. “We need to shake it up.”

The Met said the five digital prototypes resulted from a two-day hackathon last December involving museum staff members and representatives from Microsoft and MIT.

Some of the applications seemed to offer a bit less than advertised. Artwork of the Day, for example, promises “to find the artwork in the Met collection that will resonate with you today” by drawing on open data sets such as your location, weather, news and historical data. But only a narrow choice of images appeared to be available.

A prototype called Gen Studio proved to be a metaphysical challenge, generating “a tapestry of experiences” based on the “latent space” underlying the Met’s expansive collection. One such experience presented a series of dreamlike images created by AI “that interpolate between artworks”. Finally, there was Tag, That’s It!, which offered a way of fine-tuning AI-generated subject keywords to make the collection more accessible.

“This is all very new for all of us,” said Asta Roseway, a “fusionist” from Microsoft who addressed the crowd at the event and urged users to keep their minds open. She emphasised, “It’s creativity itself, which is ultimately human.”