The internet is on the brink of another revolution, but not because of starry-eyed startups or out-of-touch tech executives. Lawmakers have been working to curb tech companies’ “abusive and rampant neglect” of users’ data, privacy and vulnerability. Particularly under the banner of protecting children online, governments in the UK, US and European Union have introduced bills that target the spread of child sexual abuse material (CSAM) and “harmful” content. Tackling both is a core aim of the recently passed UK Online Safety Act. Companies found to be in breach of the act could face fines running into millions, as well as criminal charges.

Hailed by the UK government as “a game-changing piece of legislation” that will “make the UK the safest place in the world to be online”, the act has free expression groups and creatives worried that it could lead to the exclusion of marginalised artists and groups, and an end to privacy online as we know it.

The UK Online Safety Act became law on 26 October despite strong opposition from international digital rights groups, which cited the potential for governmental overreach and a chilling effect on freedom of expression online. One such group, the Electronic Frontier Foundation (EFF), tells The Art Newspaper that the act “will exacerbate the already stifled space for free expression online for artists and creatives, and those who wish to engage with their content”. The law includes a mandate that social media companies proactively keep children from seeing “harmful” content, and a challenging requirement to deploy technology that does not yet exist to scan users’ encrypted messages for CSAM while still preserving their privacy.

The latter is a sticking point for many, including the messaging apps Signal and WhatsApp, which have threatened to leave the UK if forced to break end-to-end encryption and compromise users’ privacy. The ability to communicate privately and securely is critical for people around the world to express themselves, share information and collaborate free from governmental or third-party surveillance.

Encryption has long been a point of contention between law enforcement and tech companies, with digital rights groups arguing that giving governments access to private messages opens the door to surveillance and abuse. The recent push by lawmakers to search private messages for CSAM has raised concerns that such access could be exploited by conservative and partisan governments that already target marginalised groups and creatives.

Lawmakers in the US and EU likewise see encryption as an obstacle to eradicating CSAM, and have introduced bills aimed at giving law enforcement access to private messages and media. Digital rights groups continue to warn of the dangers. “Journalists and human rights workers will inevitably become targets,” the EFF told The Art Newspaper, “as will artists and creatives who share content that the system considers harmful.”

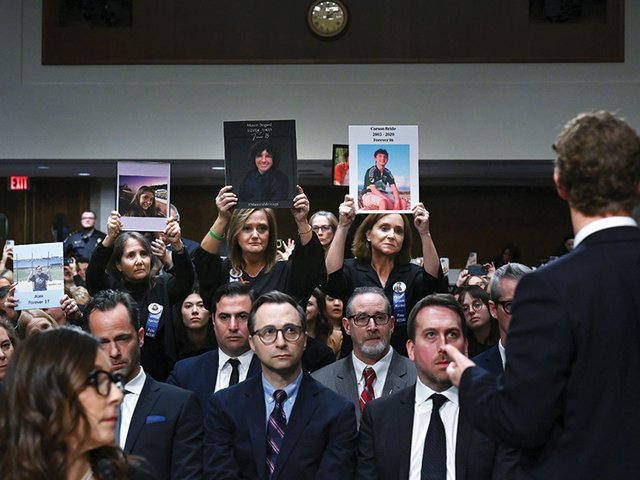

The UK act’s original goal of protecting children online grew out of high-profile cases of harm linked to social media, and rising concern over young people’s mental health. Grieving parents and a worried public, who believe the big tech companies put profits before safety, hail the act’s zero-tolerance policies and steep penalties as “ground-breaking”.

In a last-minute response to protests over the lack of protection for women and girls online, the act introduced new criminal offences that mark a positive step forward for victims of gender-based violence online. Professor Lorna Woods, an author of the violence against women and girls code of practice, believes these “could lead to a better understanding of when the safety duties come into play, understanding when harassment is happening, for example”, and that we could see a shift in how policies are enforced “when understood from the perspective of the threats women face”.

But what is ground-breaking for some may open up a sinkhole for many creatives, who already face censorship and inequality online. The requirement for companies to pre-emptively remove or filter content that could be considered harmful to young users has prompted warnings from hundreds of free expression groups and experts, who anticipate broad suppression and erasure of legal content, including art.

The US-based National Coalition Against Censorship (NCAC) tells The Art Newspaper: “When faced with potential heavy penalties and legal pressure, social media platforms are understandably tempted to err on the side of caution and filter out more content than they are strictly required to.”

That, after all, has been the effect of the 2018 US Fosta/Sesta law, which made businesses liable if their platforms were used for sex trafficking and left artists around the world facing censorship, financial repercussions and suppression. The EFF believes laws like the UK act will encourage platforms to “undertake overzealous moderation to ensure they are not violating the legislation, resulting in lawful and harmless content being censored”.

While a safer internet is needed, digital rights groups and creatives are right to be sceptical of the means by which transparency and accountability are to be achieved, and frustrated by lawmakers who ignore them. The California-based digital rights group Fight for the Future tells The Art Newspaper: “At this point, any lawmaker who gives well-meaning support to these sorts of laws is failing in their duty to consult long-time experts on digital and human rights, and to listen to artists as well as traditionally marginalised communities more broadly.”

Irene Khan, the UN special rapporteur on freedom of expression, concluded earlier this year that “smart” solutions will come not from targeting content, but from reviewing the structure and transparency practices of companies. Likewise, the EU’s Digital Services Act places the onus on companies to be transparent, engage in structural review and focus on users’ rights.

Despite calls from digital rights and free expression groups to focus on structural rather than punitive regulation, the UK act will probably be followed by similar legislation in the US and beyond. In anticipation, groups like NCAC are urging social media companies to protect artists by “[making] sure their algorithms make a clear distinction between art and material that could be considered harmful to minors so as to avoid the suppression of culturally valuable (and fully legal) works”.